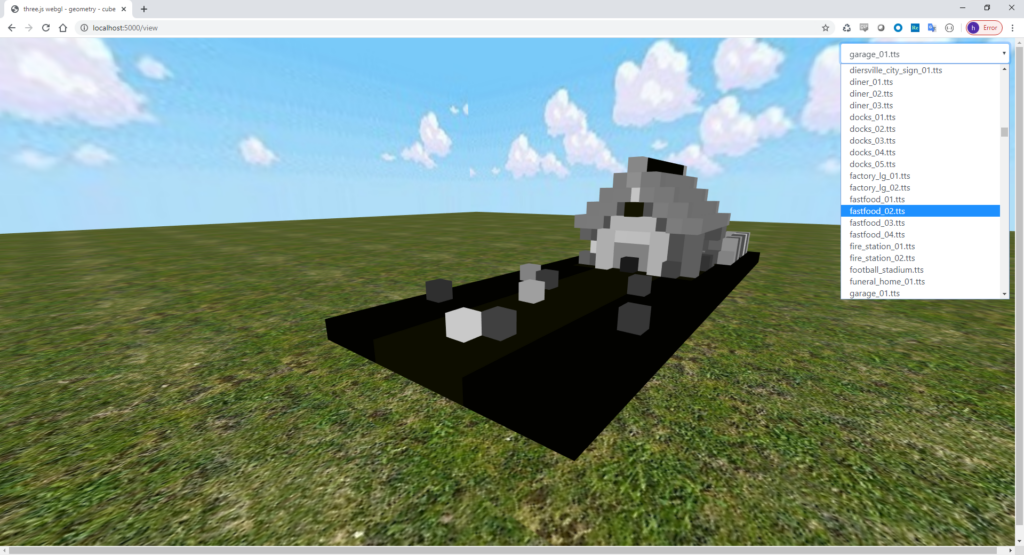

I’m working on another experiment using AI to generate game content. I’m attempting to use GPT-3 to create a text-based adventure game with actual graphics provided by VQGAN + CLIP. Here’s my first attempt at a “hand-made” POC adventure (original prompt stolen from AI Dungeon):

You are Jack, a wizard living in the kingdom of Larion. You have a staff and a spellbook. You finish your long journey and finally arrive at the ruin you’ve been looking for. You have come here searching for a mystical spellbook of great power called the book of essence. You look around and see several skeletons and ghosts of the dead. This is going to be dangerous. You need to find the book before you can leave, but you have to be careful, or you may end up a skeleton too.

Using another GPT-3 prompt I wrote to extract the setting from the text above, I got this:

An enchanted ruin in the kingdom of Larion

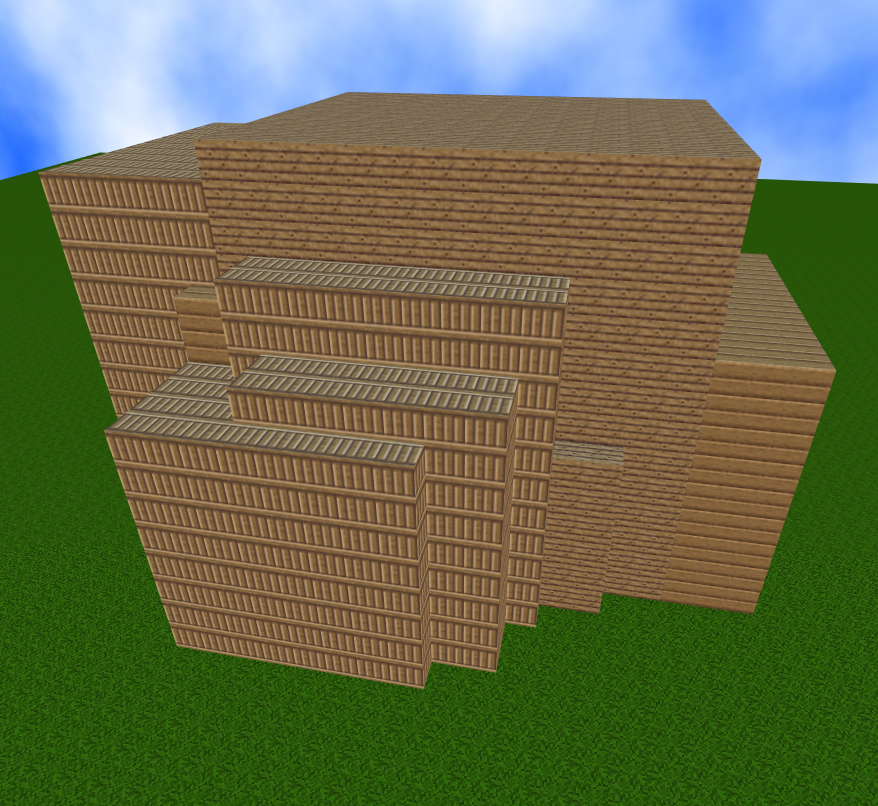

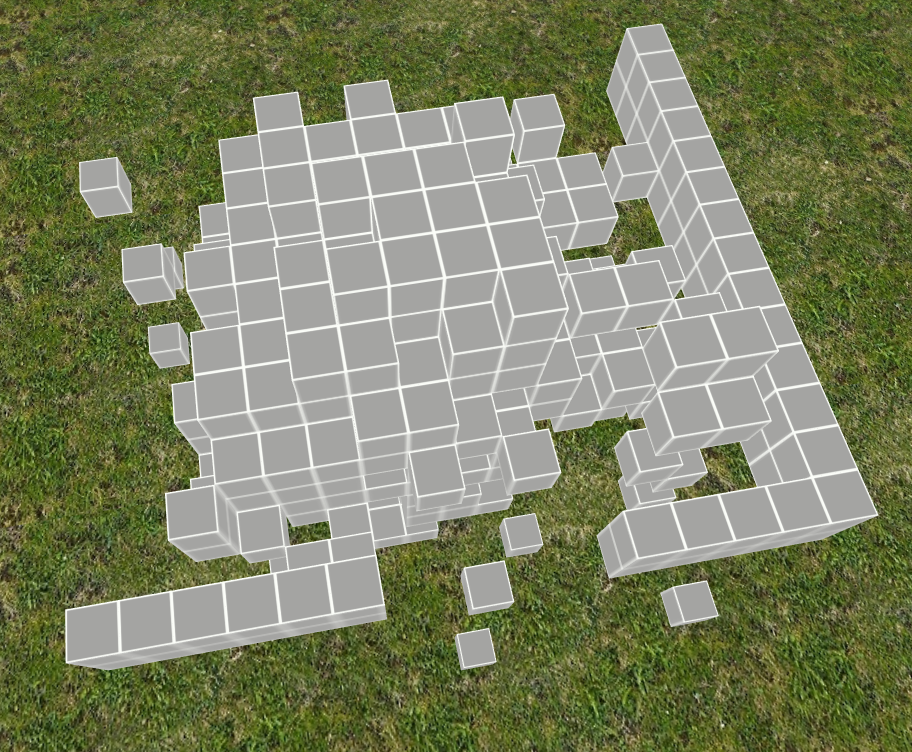

I then fed that into a Colab notebook which, after a few minutes, generated this:

Let’s see if we can keep this up. I need to tell GPT-3 what I’m going to do next. To keep the main storyline going, the perspective has to be flipped from first person to second person, so you can’t say something like:

I explore the ruins looking for a library.

Instead, you say:

You explore the ruins looking for a library.
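That flip could be automated before the player's input is appended to the story. Here is a minimal sketch in Python, assuming a simple word-level swap is good enough for short commands. The `to_second_person` helper and its pronoun table are hypothetical, not part of any library, and they only cover the most common first-person forms.

```python
import re

# Hypothetical lookup table: common first-person words and their
# second-person replacements. Anything not listed is left untouched.
PRONOUNS = {
    "i": "you", "me": "you", "my": "your", "mine": "yours",
    "myself": "yourself", "i'm": "you're", "i've": "you've",
    "i'll": "you'll", "i'd": "you'd",
}

# Verb agreement fixes needed after the swap ("I am" -> "you are").
VERB_FIXES = [("you am", "you are"), ("you was", "you were")]


def to_second_person(action: str) -> str:
    """Rewrite a first-person player action in second person."""
    # Normalize curly apostrophes so contractions match the table.
    text = action.strip().replace("\u2019", "'")

    def swap(match: re.Match) -> str:
        word = match.group(0)
        return PRONOUNS.get(word.lower(), word)

    text = re.sub(r"[A-Za-z']+", swap, text)
    for wrong, right in VERB_FIXES:
        text = re.sub(rf"\b{wrong}\b", right, text, flags=re.IGNORECASE)
    # Capitalize the first character so the sentence still reads cleanly.
    return text[:1].upper() + text[1:]


print(to_second_person("I explore the ruins looking for a library."))
```

A word-level swap like this will trip over quoted dialogue and less common verb forms, so a real game would probably need something sturdier.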

Here’s the next sequence from GPT-3:

Suddenly, a ghost appears. It says, “I am the ghost of the old wizard and I will not let you pass. I will stop you with my magic.”

Now, we need to see what my setting extraction prompt does with this:

The ruins of a wizard’s tower in the kingdom of Larion.

Not exactly what we wanted… I need to rewrite my prompt so that it favors the player’s latest actions. After some experimentation, I got this:

A wizard searching for a magical spellbook in a ruined ruin called the Book of Essence.

That would have been a better prompt to use when we generated the first image, but what we want here is a simple summary of what just happened in our story, something like:

The ghost of the old wizard appearing in an enchanted ruin in the kingdom of Larion.

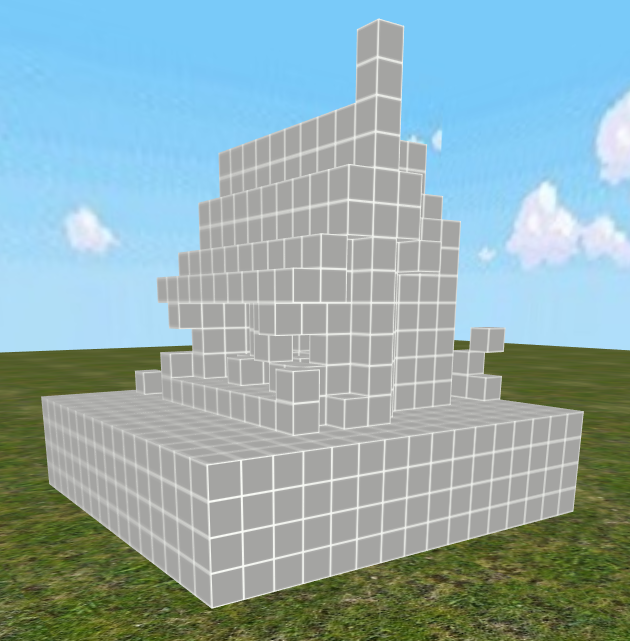

I was able to get GPT-3 to focus on the current events in the story by simply providing it with examples of this in the setting extraction prompt, and I managed to generate this:

A ghost appearing to a wizard in a kingdom of Larion.

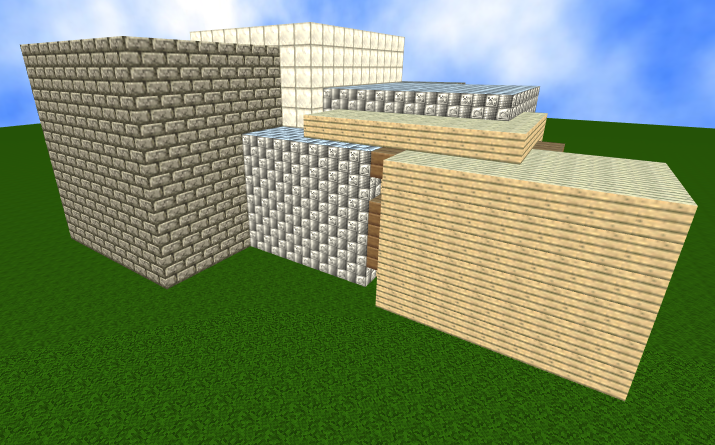
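The idea of steering GPT-3 with examples can be sketched as a small prompt builder. This is only an illustration, not the actual prompt used here: the `build_scene_prompt` function, the instruction line, and the `Story:`/`Scene:` labels are all assumptions. The first example pair reuses text from this post, and the second is invented purely for illustration.

```python
# Hypothetical few-shot pairs of (story passage, scene description).
EXAMPLES = [
    (
        'Suddenly, a ghost appears. It says, "I am the ghost of the old '
        'wizard and I will not let you pass. I will stop you with my magic."',
        "The ghost of the old wizard appearing in an enchanted ruin "
        "in the kingdom of Larion.",
    ),
    (
        # Invented example, not from the original experiment.
        "You push open the tavern door. A bard plays in the corner "
        "while a hooded stranger watches you.",
        "A hooded stranger watching from the corner of a crowded tavern.",
    ),
]


def build_scene_prompt(story_so_far: str, examples=EXAMPLES) -> str:
    """Assemble a few-shot prompt that ends where the model should answer."""
    parts = [
        "Describe the most recent event in each story as one short sentence.",
        "",
    ]
    for passage, scene in examples:
        parts += [f"Story: {passage}", f"Scene: {scene}", ""]
    # Leave the final "Scene:" open so the completion fills it in.
    parts += [f"Story: {story_so_far.strip()}", "Scene:"]
    return "\n".join(parts)


print(build_scene_prompt("You explore the ruins looking for a library."))
```

The resulting string would be sent to GPT-3 as a completion prompt, and the first line of the response would become the text prompt for the image generator.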

That’s more like it. It doesn’t include the true setting, which in this case should be a ruin in the kingdom of Larion, but we now have a better idea of the kind of extraction prompt this needs. Here’s the result from the image generator using the new prompt from GPT-3:

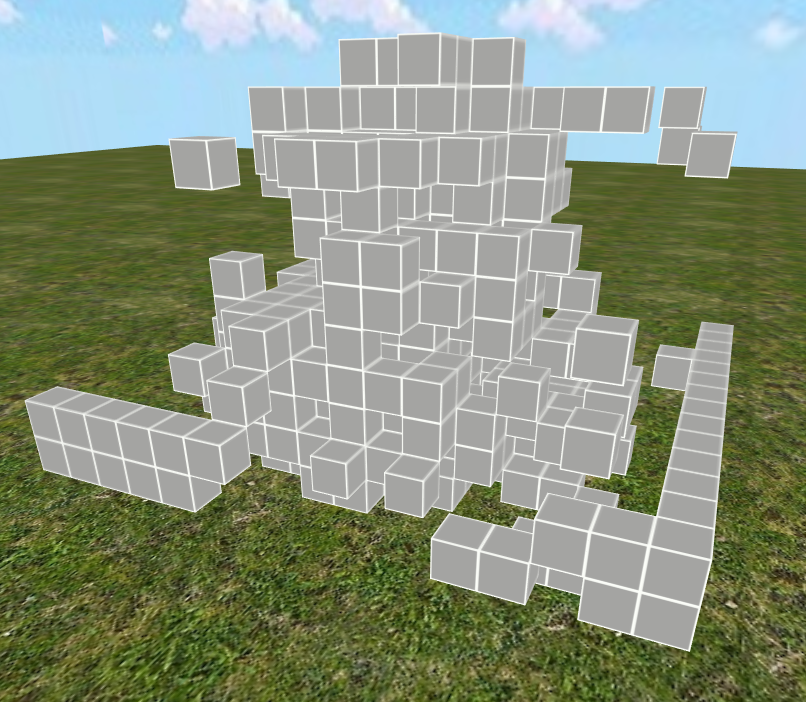

Despite the third-grader aesthetic, the novelty is undeniable. Both of these images seem relevant enough to the story to make me think that this experiment could be made into an actual game. Given that the sequence above was generated manually, my plan now is to automate the process and see where I can take it. But unless you like waiting 10 minutes for each frame of gameplay to be generated, it won’t be featured on Steam any time soon. 😉
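For what it's worth, the manual sequence above maps onto a fairly simple loop. Here is a rough sketch of one turn, assuming the three steps (story continuation, scene extraction, image rendering) are passed in as plain functions. The names `Frame`, `play_turn`, `continue_story`, `extract_scene`, and `render_image` are all hypothetical, and the stubs at the bottom stand in for the real GPT-3 and VQGAN + CLIP calls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Frame:
    """One turn of gameplay: what was typed, what happened, what was drawn."""
    action: str
    story: str
    scene: str
    image_path: str


def play_turn(
    history: List[str],
    action: str,
    continue_story: Callable[[str], str],   # stand-in for the story model
    extract_scene: Callable[[str], str],    # stand-in for scene extraction
    render_image: Callable[[str], str],     # stand-in for the image generator
) -> Frame:
    """Run one turn: continue the story, summarize the scene, render it."""
    context = "\n".join(history + [action])
    story = continue_story(context)
    scene = extract_scene(context + "\n" + story)
    image_path = render_image(scene)
    # Keep both the action and the continuation for the next turn's context.
    history.extend([action, story])
    return Frame(action, story, scene, image_path)


# Stubs so the sketch runs without any external service.
history = ["You are Jack, a wizard living in the kingdom of Larion."]
frame = play_turn(
    history,
    "You explore the ruins looking for a library.",
    continue_story=lambda ctx: "Suddenly, a ghost appears.",
    extract_scene=lambda text: "A ghost appearing to a wizard.",
    render_image=lambda scene: "frame-001.png",
)
print(frame.scene, frame.image_path)
```

Passing the steps in as functions keeps the loop testable with stubs, and the slow image step could later be swapped out without touching the rest.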